This is an edition of Up for Debate, a newsletter by Conor Friedersdorf. On Wednesdays, he rounds up timely conversations and solicits reader responses to one thought-provoking question. Later, he publishes some thoughtful replies. Sign up for the newsletter here.

Question of the Week

To compose this week’s question, I had a conversation with OpenAI’s chatbot, GPT-3 (which anyone can try). “Every week I ask readers of my newsletter a different question,” I wrote. “Would you compose this week’s question on the subject of AI, choosing one that is likely to elicit the highest number of interesting responses?” GPT-3 responded, in part, with this suggestion:

Sure! Here is a question that might elicit a high number of interesting responses: How do you think AI will change the way we live and work in the next decade?

This question is like asking, circa 1995, how the internet would change the way we live and work. When you respond, know that people of the future will look back with interest on your predictions!

Send your responses to conor@theatlantic.com.

Conversations of Note

In addition to prompting GPT-3 to generate this week’s question, I interviewed it about the other OpenAI tool that I’ve been testing out, DALL-E, an artificial-intelligence program capable of generating original images from text descriptions. “It has the potential to significantly improve the efficiency of image creation,” GPT-3 told me, with applications in advertising, design, entertainment, art work, and other creative enterprises.

As an example, I asked DALL-E to generate images of four Looney Tunes characters as if they were starring in a Wes Anderson movie. Here is the star-studded cast:

Yosemite Sam:

Bugs Bunny:

Wile E. Coyote:

And the Road Runner:

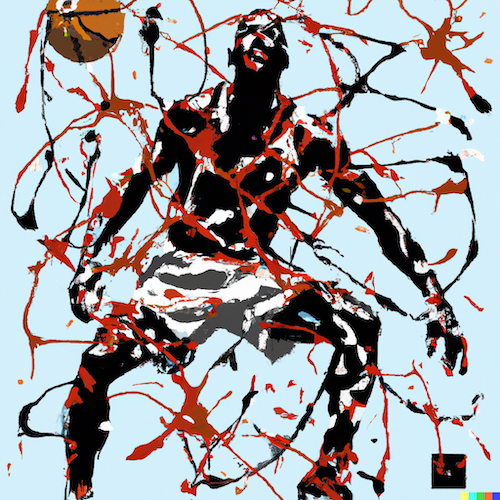

I also asked DALL-E to generate Michael Jordan posters in different styles. Here’s one in the style of Jackson Pollock:

Just as interesting were the results when I deployed a trick I picked up at a recent Atlantic event in Los Angeles: asking the text-based ChatGPT to help write better prompts for an image-generating AI. For example, say I was trying to come up with ideas to decorate my living room. If I ask DALL-E to generate “a living room that would be good for reading in” I get this:

Whereas if I ask GPT-3 to help me to write a better prompt for DALL-E, I get this: “Show me a living room with comfortable seating, good lighting, and plenty of shelving for books, that would be the perfect place to relax and get lost in a good book. Include a fireplace, a view of the outdoors, and a quiet and peaceful atmosphere.” Pasting that into DALL-E generates this:

You can play with DALL-E on your own, too, and if you do you’ll quickly discover how expansive its potential use cases are. I’ll be eager to hear your various thoughts by email. My prediction is that, for a long time to come, the use of text and image content generated by AI platforms plus human prompts will outstrip the use of content generated by AI alone, or by humans alone, across many applications.

Is Writing Still an Important Skill to Learn?

Daniel Herman, who teaches various high-school humanities classes, reflects in The Atlantic on advances in artificial intelligence that can “generate sophisticated text in response to any prompt you can imagine.” The technology “may signal the end of writing assignments altogether—and maybe even the end of writing as a gatekeeper, a metric for intelligence, a teachable skill,” he argues:

If you’re looking for historical analogues, this would be like the printing press, the steam drill, and the light bulb having a baby, and that baby having access to the entire corpus of human knowledge and understanding. My life—and the lives of thousands of other teachers and professors, tutors and administrators—is about to drastically change.

… This semester I am lucky enough to be teaching writers like James Baldwin, Gloria Anzaldúa, Herman Melville, Mohsin Hamid, Virginia Held. I recognize that it’s a privilege to have relatively small classes that can explore material like this at all. But at the end of the day, kids are always kids. I’m sure you will be absolutely shocked to hear that not all teenagers are, in fact, so interested in having their mind lit on fire by Anzaldúa’s radical ideas about transcending binaries, or Ishmael’s metaphysics in Moby-Dick.

To those students, I have always said: You may not be interested in poetry or civics, but no matter what you end up doing with your life, a basic competence in writing is an absolutely essential skill—whether it’s for college admissions, writing a cover letter when applying for a job, or just writing an email to your boss. I’ve also long held, for those who are interested in writing, that you need to learn the basic rules of good writing before you can start breaking them—that, like Picasso, you have to learn how to reliably fulfill an audience’s expectations before you get to start putting eyeballs in people’s ears and things.

I don’t know if either of those things is true anymore. It’s no longer obvious to me that my teenagers actually will need to develop this basic skill, or if the logic still holds that the fundamentals are necessary for experimentation. Let me be candid (with apologies to all of my current and former students): What GPT can produce right now is better than the large majority of writing seen by your average teacher or professor … I believe my most essential tasks, as a teacher, are helping my students think critically, disagree respectfully, argue carefully and flexibly, and understand their mind and the world around them. Unconventional, improvisatory, expressive, meta-cognitive writing can be an extraordinary vehicle for those things. But if most contemporary writing pedagogy is necessarily focused on helping students master the basics, what happens when a computer can do it for us?

Will “Creative” AIs Increase Returns to Excellence?

That is the writer Virginia Postrel’s guess, as she notes in her Substack newsletter:

While crashing the value of mediocrity, ChatGPT could increase the returns to excellence. (“Average is over,” as Tyler Cowen put it.) Think about what happened to graphic design. Many people used to make a living doing routine tasks, from laying out pages to selecting typefaces, that are now easily handled by software. Thanks to the graphic intelligence embedded in everyday tools, the standards for routine graphics, from websites and PowerPoint presentations to restaurant menus and wedding invitations, have increased.

But that doesn’t mean there’s no work for graphic designers with the conceptual chops to take on complicated tasks. Powerful tools make iteration and brainstorming easier, but cleverness is still a valued skill. When my friend Shikha Dalmia launched The Unpopulist on Substack, she asked me to look at some logos she’d come up with using easily available tools.

They weren’t terrible, but neither were they distinctive. “Hire a professional,” I advised, and she got a real logo … Mediocre writing that earns grade-inflated Bs is now replaceable by a bot. Maybe if those B-essay students started with AI-generated prose it would be easier to teach them to do better: to refine the ideas, dig down more on the facts, improve the writing style. Can ChatGPT be a time-saving tool, like a calculator or text search, rather than a threat?

Will Humans Have Inflated Confidence in AI?

Louis Rosenberg expresses that worry at Big Think:

Personally, my biggest concern about Generative AI systems is that we humans may assume that their informational output is accurate because it came from a computer. After all, most of us grew up watching shows and movies like Star Trek where characters verbally ask computers for information and instantly get accurate and trustworthy results. I even can hear Captain Picard in my head barking out a command like, “Computer, estimate how long it will take for us to catch up with that space probe.” And an authoritative answer comes back. Everyone believes it. After all, it’s from a computer.

But here’s the problem: Generative AI systems are trained on massive sets of human documents that are not comprehensively vetted for accuracy or authenticity. This means the training data could include some documents that are filled with misinformation, disinformation, political bias, or social prejudice. Because of this, ChatGPT and other systems include disclaimers like, “May occasionally generate incorrect information,” and, “May occasionally produce harmful instructions or biased content.” It’s great that they tell you this up front, but I worry people will forget about the disclaimers or not take such warnings seriously. These current systems are not factual databases; they are designed to imitate human responses, which could easily mean imitating human flaws and errors.

I’ve noticed some inaccuracies in my own experiments. For example, you’ll frequently hear people declare, “hate speech is not free speech.” That is incorrect: “hate speech” is not a legal category, and lots of hateful speech and expression is protected by the First Amendment. But ChatGPT kept telling me that hate speech is not protected by the First Amendment.

A Contradiction at the Core of the American Dream

In an article titled “The Homeownership Society Was a Mistake,” my colleague Jerusalem Demsas argues:

At the core of American housing policy is a secret hiding in plain sight: Homeownership works for some because it cannot work for all. If we want to make housing affordable for everyone, then it needs to be cheap and widely available. And if we want that housing to act as a wealth-building vehicle, home values have to increase significantly over time. How do we ensure that housing is both appreciating in value for homeowners but cheap enough for all would-be homeowners to buy in? We can’t.

What makes this rather obvious conclusion significant is just how common it is for policy makers to espouse both goals simultaneously. For instance, in a statement last year lamenting how “inflation hurts Americans pocketbooks,” President Joe Biden also noted that “home values are up” as a proof point that the economic recovery was well under way.

So rising prices are bad, except when it comes to homes.

Policy makers aren’t unaware of the reality that quickly appreciating home prices come at the cost of housing affordability. In fact, they’ve repeatedly picked a side, despite pretending otherwise. The homeowner’s power in American politics is unmatched. Rich people tend to be homeowners and have an outsize voice in politics because they are more likely to vote, donate, and engage in the political process.

Provocation of the Week

This week’s subject is pet adoption:

As a society, we have long been encouraged to adopt pets as a way to provide homes for animals in need and reduce the number of homeless pets. However, upon closer examination, the act of adoption raises a number of serious concerns. First and foremost, adoption perpetuates a system of overpopulation and exploitation. By adopting a pet, we are essentially filling a demand for more animals and contributing to the cycle of breeding and disposability. It is estimated that there are already more than enough pets in the world to meet the demand, yet we continue to breed and produce more.

Additionally, adoption can be a risky and uncertain process. When we adopt a pet, we often do not know their full history or any potential behavioral or medical issues they may have. This can lead to unexpected costs and challenges in care, as well as the potential for harm to ourselves and others. Furthermore, adoption can be a superficial and self-serving act. By adopting a pet, we often do so for our own benefit and convenience, rather than considering the needs and well-being of the animal. This can lead to a lack of commitment and responsibility on the part of the adopter, resulting in a high rate of animal abandonment and neglect.

In conclusion, while adoption may seem like a noble and compassionate act, it is ultimately a flawed and irresponsible approach to addressing the issue of homeless pets. Instead of perpetuating a system of overproduction and exploitation, we should focus on addressing the root causes of pet homelessness and promoting more ethical and sustainable alternatives.

If you haven’t guessed by now, that, too, was generated by ChatGPT, given the prompt “write an argument against adoption.” That is the last appearance AI-generated words will make in this newsletter, and I personally encourage you to adopt a dog at the earliest viable opportunity!